Hello Friends,

Welcome to passionforcs!!!

In the previous article, we saw what a cost function is. If you haven't read it yet, please check it out first to get the details about the cost function and make the concept clear.

In this article, we are going to learn the formula of the cost function and how to calculate it.

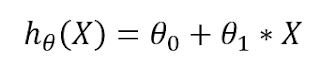

Let's briefly revise what a cost function is. A cost function is a function used to check whether the model works well or not. To check this, we need the hypothesis, i.e. our prediction. Now the question is: how do we get the hypothesis?
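As a reference point, assuming the model is linear regression with a single input feature x (the setting that the squared error cost function in this article applies to), the hypothesis is commonly written as:

$$
h_\theta(x) = \theta_0 + \theta_1 x
$$

The symbols θ_0 and θ_1 follow the usual convention and are an assumption here. With more than one input feature, each feature would get its own ϴ value.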

Now, you can get your prediction through the above equation. But here the question is: what is theta (ϴ)?

So the answer is, ϴ is an additional parameter of the model, separate from the input.

Now we have one more question: why? What is the need for an additional parameter?

Let me give you an example so you can understand it easily.

Consider our previous example, stock price prediction. Here we analyze the stock or share price and make our prediction. We can set up an input feature vector, or simply consider one input feature only. The inputs are the opening price, closing price, high and low prices, etc. The additional parameter we need in this example is our money, i.e. how much do you want to invest? From that, we can work out how much profit or loss we will have. We can call this investment an additional parameter because it depends on us, i.e. the investor. Keep in mind that this is only an analogy: in linear regression, the ϴ values are learned from the training data rather than chosen by hand.

NOTE: The human brain makes predictions in a similar way to the above example.

Now, let's consider the formula of the cost function.
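Putting together the terms explained in the rest of this article (the 1/2m factor, the sum, the hypothesis, and the actual label), the formula can be written as follows. The name J(ϴ) for the cost is a common convention and is assumed here:

$$
J(\theta) = \frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2
$$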

The above formula is the cost function of linear regression. It is also known as the squared error cost function.

Here, in 1/(2m), m is the number of samples in the data or training set. The 1/2 is used because, when we take the derivative of the cost function to update the parameters during gradient descent, the exponent 2 from the square cancels with the 1/2 multiplier, so the derivative is cleaner.

Sigma (𝚺) - represents the sum over all m samples.

hϴ(x(i)) - the hypothesis (prediction) for the input x at the ith index. (With a single value, this is simply the hypothesis value h(x).)

y(i) - the actual label value for the input x at the ith index.

Just subtract the actual value, i.e. y, from the hypothesis prediction (predicted value), i.e. h(x), and square the result.
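To see why the 1/2 makes the derivative cleaner, here is a short worked step. It assumes the cost is called J(ϴ) (a name chosen here for illustration) and differentiates it with respect to one parameter ϴ_j using the chain rule:

$$
\begin{aligned}
\frac{\partial J}{\partial \theta_j}
&= \frac{\partial}{\partial \theta_j}\,\frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 \\
&= \frac{1}{2m}\sum_{i=1}^{m} 2\left(h_\theta(x^{(i)}) - y^{(i)}\right)\frac{\partial h_\theta(x^{(i)})}{\partial \theta_j} \\
&= \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)\frac{\partial h_\theta(x^{(i)})}{\partial \theta_j}
\end{aligned}
$$

The 2 that comes down from the square cancels the 1/2, leaving only 1/m in front of the sum.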

This is the calculation of the cost function using MSE, i.e. Mean Squared Error (here scaled by the extra factor of 1/2). We will learn more with an example.

Till then, try to compute some costs by plugging in some values of x and theta.
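If you would like to try this on a computer, here is a minimal Python sketch. It assumes a single-feature linear hypothesis h(x) = theta0 + theta1 * x, and the function names and toy data are made up for illustration, so treat it as one possible way to do the calculation rather than a definitive implementation.

```python
def hypothesis(theta0, theta1, x):
    """Prediction of an assumed single-feature linear model: theta0 + theta1 * x."""
    return theta0 + theta1 * x


def squared_error_cost(theta0, theta1, xs, ys):
    """Squared error cost: (1 / (2m)) * sum of (h(x_i) - y_i)^2 over all m samples."""
    m = len(xs)
    total = 0.0
    for x, y in zip(xs, ys):
        error = hypothesis(theta0, theta1, x) - y  # prediction minus actual label
        total += error ** 2                        # square the difference
    return total / (2 * m)


# Made-up toy data (not from the article): here y is exactly 2 * x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

print(squared_error_cost(0.0, 2.0, xs, ys))  # perfect fit, so the cost is 0.0
print(squared_error_cost(0.0, 1.0, xs, ys))  # worse fit, so the cost is larger
```

With theta0 = 0 and theta1 = 2 every prediction matches its label, so the cost is zero. With theta1 = 1 the squared errors are 1, 4 and 9, giving a cost of 14 / 6, or about 2.33. Try other values of theta and see how the cost changes.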

Stay Safe Anywhere. :)
